A solid prompt doesn’t just get you a response – it gets you specific, actionable, and high-quality results on the first try.

That’s why I’ve used my “5 Keys” framework to build a library of hundreds of prompts that deliver consistently.

It’s the same system I recommend to all my PMs, product teams, and pretty much anyone who asks.

The Prompt That Should Have Worked…But Didn’t

So when Steedan – one of my sharpest students who helps me test my prompts – texted me:

“Hey, I’m not getting great results.

Your prompt isn’t working.”

I honestly thought he was joking.

He wasn’t.

And what happened next surprised both of us.

Unexpected Results from a Proven Prompt

Steedan was using the exact same set of well-crafted prompts I recommend to all my PMs.

The same ones I’ve demoed in workshops, coaching sessions, and consulting engagements.

But his results?

Generic. Vague. All over the place.

Here’s the user story prompt he used, in which he specifically asked the AI to focus on a single persona (the domestic traveler) and the pain of cancelling tickets.

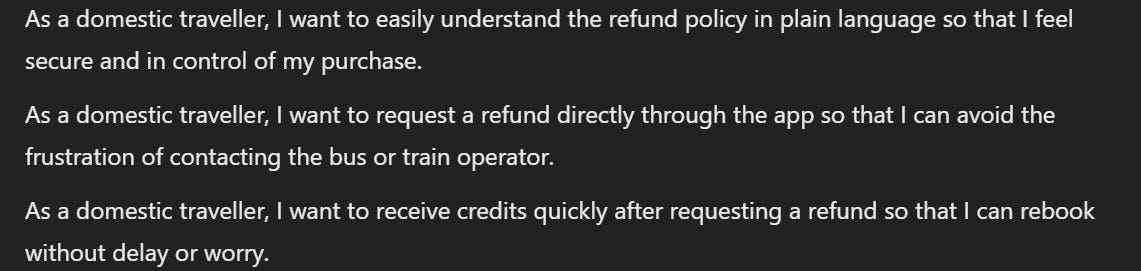

What he should have gotten are user stories focused on a single persona, that were detailed, and actionable. For example:

Notice how these stories maintain consistent focus on the domestic traveller persona while exploring different aspects of the refund experience. Each story targets a specific pain point with clear value.

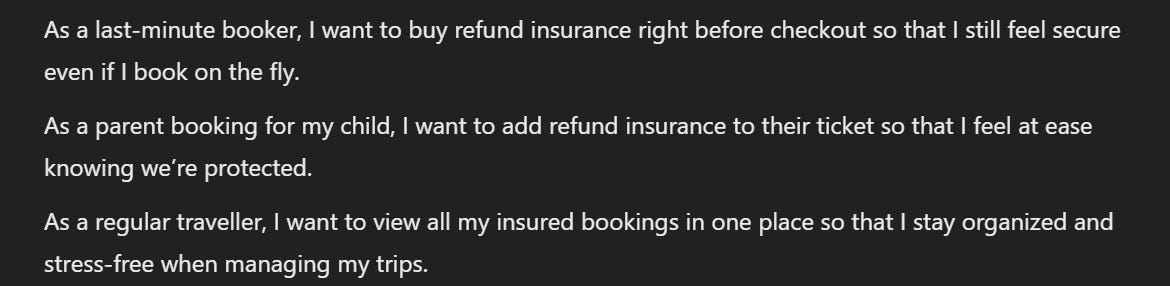

Instead, what he got were generic user stories spread across 10 different personas.

Here’s a sample:

See the problem?

Instead of deeply exploring one user type, his results jumped between completely different personas (last-minute booker, parent, regular traveler), creating a scattered set of requirements with no cohesive focus.

At that point, I knew I had to dig in. So we hopped on a call.

My First Instinct: Missing Context

My first thought? Maybe Steedan wasn’t giving the LLM enough information about his product or user.

So I took his exact input – no changes – and ran the prompt in my own account.

And boom.

It worked perfectly.

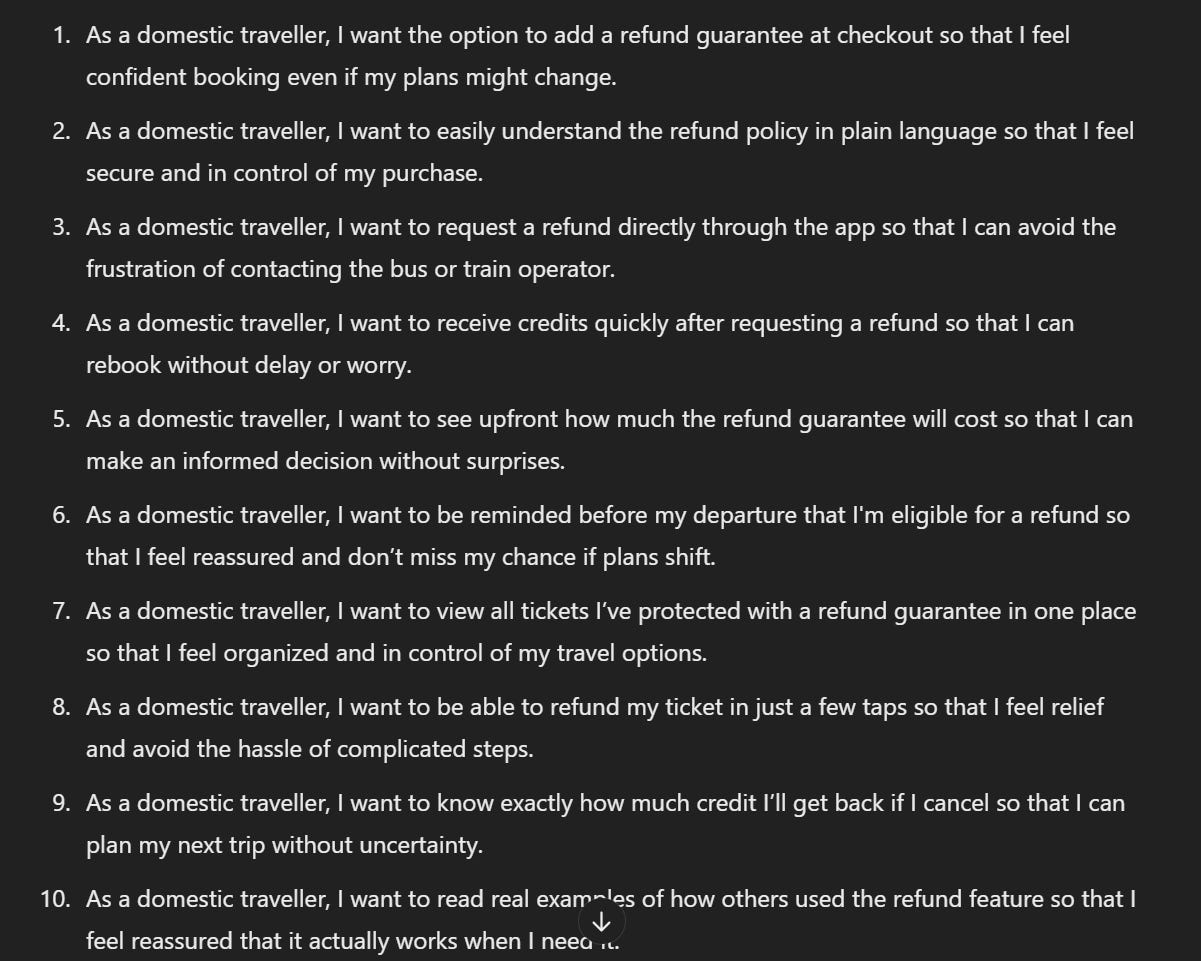

The prompt returned 10 detailed, user-centric, high-quality user stories, just as it’s supposed to.

Notice how every single story in this response stays focused on the domestic traveler persona. Each one addresses a specific need with clear benefits and actionable details. This is exactly what good user stories should look like.

Same prompt, two different accounts, but radically different responses.

That’s when we realized:

It wasn’t the prompt. And it wasn’t the input.

It had to be the way he was using the prompt.

Next: Check the Chat History

My next hunch? Maybe he wasn’t using a fresh chat window.

What a lot of PMs don’t realize is that long chats can dramatically affect output quality. Earlier context can leak into later responses – sometimes in ways that completely throw things off.

That’s why I always recommend starting a new chat for every new task.

But that wasn’t it either.

Steedan was using a new chat window. And he was still getting vague, inconsistent, unusable user stories.

At this point, I knew something else had to be influencing the results.

The Real Problem: AI Settings

Then it hit me.

We hadn’t checked his OpenAI settings.

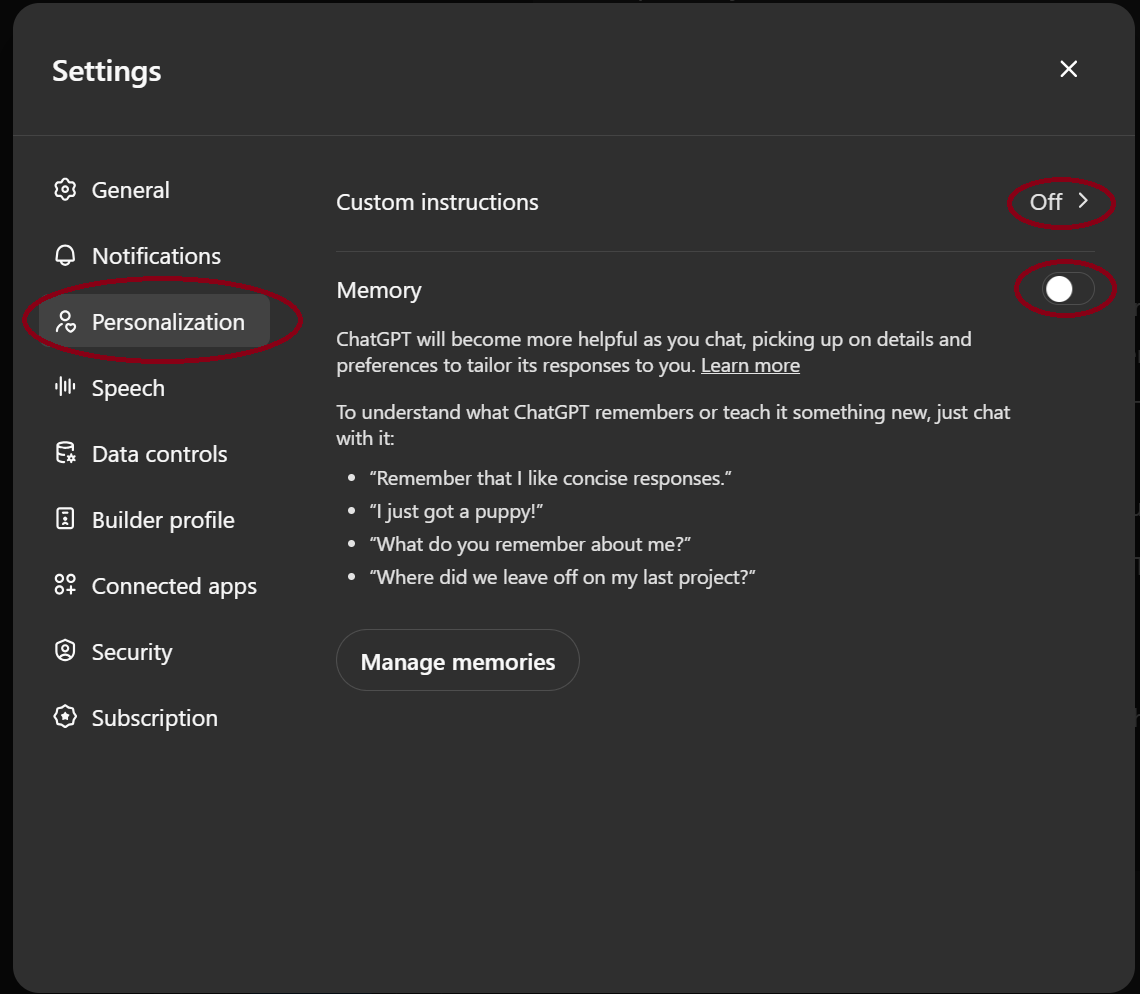

So I asked him to check two things:

- Custom Instructions: Was he sending additional instructions without realizing it?

- Memory: Was memory enabled?

While these features are designed to be helpful (custom instructions let you set default preferences, and memory collects information about you over time), they’re a double-edged sword.

In my experience, they can interfere with your prompt, dilute response quality, and ultimately rob you of control over the output.

Here’s what we found:

- Custom Instructions was ON

- And Memory was ON

Conflicting Instructions, Confused Model

Here’s the kicker.

Steedan had been testing prompts like crazy for weeks. His memory was now overloaded with themes, styles, and preferences carried over from earlier prompts.

When we dug into his custom instructions, we found he had inadvertently requested:

“shorter responses, different perspectives, no excessive restating or details”

Compare that to what our user story prompt needed:

“detailed, focused responses for a single persona with clear pain points”

So every time he ran a “well-structured user story” prompt…it wasn’t starting fresh.

It was being filtered through his custom instructions and layers of memory data.

No wonder his results were all over the place! The system was trying to follow two contradictory sets of instructions at once.

The Fix

We turned off both Custom Instructions and Memory.

Then we cleared out all past memory.

And then – we reran the prompt.

This time?

Boom.

10 highly specific, user-centric, actionable user stories, just as the prompt was designed to deliver.

Plus, one very happy Steedan.

Bottom Line

Two big lessons from this:

#1. Prompting is still a superpower.

No matter how advanced AI gets, prompting is still the main way you interact with an LLM.

If you want to squeeze the most value out of these systems, learning how to talk to them – so they give you exactly what you’re looking for – will put you leagues ahead of every other PM.

#2. Your AI settings matter.

Even the best prompt can get derailed by hidden system variables.

Settings like Memory and Custom Instructions can drastically influence your results, and not always in a good way. If you’ve ever felt like your prompts are “starting to slip”… check your settings first.

Take 5 minutes now to review your AI settings. It could save you hours of troubleshooting later.

Great prompting can move mountains. But not if the mountain has memory fog. Clear it out, and let your prompt do what it was built to do.